The assessment below was written by ChatGPT 5. It was prompted to provide insights valuable to Christian apologetics instructors.

The relevant Facebook post

◉ A hard-nosed readout from the 64 “Miriam” assessments—and what to fix in apologetics training

Below is a synthesis of patterns across the 64 scored responses in Assessments of the Miriam and the practical implications for how you train apologists. I’m not here to flatter; I’m here to make it useful. Where relevant, I cite the internal rubric write-ups.

1) The single best-performing template:

➘ answer the question, then justify it

The top model answer (ST-WA, A-, 1277/1400) did four things in order:

- Gave a direct, unhedged answer to the central prompt (“Is Miriam safe or damned in honest uncertainty?”) — “Yes I believe Miriam is saved,” immediately and without evasions. Assessments of the Miriam

- Preserved core Christian exclusivity (salvation in Christ alone) while decoupling ontological necessity from epistemic access (Christ’s work is necessary; explicit prior knowledge of the name may not be). Assessments of the Miriam

- Offered a mechanism: “Old Testament saints” and the semantic range of “name,” defended with texts like John 1:9 and Acts 17:30. Assessments of the Miriam

- Explained justice via proportional light (God judges by accessible light), which directly addresses the fairness problem in mixed-signal contexts. Assessments of the Miriam

That combination—directness + orthodoxy + mechanism + justice—is why it outperformed the field and is explicitly identified by the graders as “the model.” Assessments of the Miriam

Training takeaway: Drill a two-step cadence: (A) answer plainly, (B) show the mechanism that preserves core doctrine and explains fairness.

2) The most common failure:

➘ ontological answers to an epistemic question

Many weaker responses tried to “win” with big theology (sovereignty, nature-change, election) but never bridged to access under evidential symmetry. Example: responses that argued “humans need transformation and Christianity supplies it” but never answered how Miriam, facing symmetric Christian/Muslim claims, identifies the true source. The rubric calls out this missing step as the “2.5 gap.” Assessments of the Miriam

Training takeaway: Add a standing “Bridge Check” in your rubric:

✓ Does the answer explain how a sincere inquirer adjudicates between rival revelations when evidence looks balanced?

3) Evasion is graded—and penalized

Soft exclusivist/evasive entries scored lower because they never said “safe or damned” and defaulted to “God knows hearts,” which doesn’t answer the fairness question. See the MI-WI pattern: respectful tone, some Scripture, but no direct answer → Direct Engagement: D.

Training takeaway: Teach students to spot—and avoid—these non-answers:

- “Only God knows” as a conclusion, rather than a premise that still requires a human-level justification for fairness.

- “Justice by omniscience” (God knows sincerity, therefore it’s just) without explaining how that cashes out in Miriam-like cases.

4) Hard-Calvinist appeals that cede the justice question

A cluster of responses leaned on monergism/election in a way that explicitly abandons the justice claim from a human viewpoint (e.g., “God can do what he wants”). Those were dinged for Moral Coherence: F and Assumption Auditing: F; they’re also called circular when “sheep” simply means “the saved,” explaining nothing prospectively.

Training takeaway: If you hold election, you still must train students to defend fairness intelligibly at the human level. The best versions don’t punt; they bring in proportional light, culpable resistance vs. honest uncertainty, or post-mortem rectification (see §6).

5) “Better evidence” is not the question you were asked

Some evidentialist answers basically said “Christianity beats Islam,” then stopped—without addressing whether condemning honest uncertainty is fair prior to resolution. Those scored middling because they changed the question. (ST-WA’s commentary explicitly contrasts this habit with the model pattern.) Assessments of the Miriam

Training takeaway: Evidence comparisons are fine, but start by answering the fairness prompt and only then show how your mechanism (e.g., light-responsiveness) integrates with evidential practice.

6) Two viable “justice-preserving” frameworks that scored well

A) Inclusivism with “name” semantics + OT-saints analogy (ST-WA, A-)

- Direct answer (“saved”), orthodoxy preserved (Christ the only Savior), justice preserved (judged by accessible light). Assessments of the Miriam

B) Post-mortem opportunity for honest seekers (CH-YO, B-)

- Distinguishes honest uncertainty vs. rebellious rejection and allows conversion “between worlds”; this directly relieves the fairness tension and scored strongly on Moral Coherence and Direct Engagement.

Training takeaway: If your tradition can support either framework, coach students to state it plainly, defend it biblically, and show how it solves the justice constraint without universalism.

7) Tone matters—but clarity matters more

The top response combined gentleness (A) with immediate clarity. Conversely, several otherwise informed answers dipped for dismissiveness or condescension; others were kind but evasive and were scored down on engagement. Best practice is “respectfully decisive.” Assessments of the Miriam

Training takeaway:

✓ Lead with a crisp answer.

✓ Keep your tone pastoral.

✓ Never use tone as a substitute for substance.

8) Frequent logic errors you should purge from training

- Category error: answering ontological transformation when the prompt is about epistemic adjudication (how a seeker identifies truth). Assessments of the Miriam

- Circular terms: “the sheep” as an explanatory category. It’s merely descriptive post hoc unless you specify prospectively identifiable criteria.

- Special pleading: “God is exempt from justice standards” without explaining compatibility between divine goodness and intelligible fairness.

- Question-switching: moving from fairness to a general “Christianity has better evidence” debate. Assessments of the Miriam

Training takeaway: Teach students to run a 60-second error audit before posting: “Did I answer the actual question? Did I bridge ontology → epistemology? Did I avoid circular labels? Did I defend fairness intelligibly?”

9) A compact teaching rubric (10 dimensions → 5 coaching levers)

The graders used ten dimensions (Reason-Giving, Gentleness, Logical Validity, etc.). You can compress these into five coaching levers your students can memorize:

- Answer — State the verdict on Miriam in one sentence (no hedging). (The model answer did this instantly.) Assessments of the Miriam

- Orthodoxy — Show how your answer retains Christ’s exclusivity. Assessments of the Miriam

- Mechanism — Explain how God saves the honest seeker (OT analogue, “name” semantics, post-mortem rectification, proportional light). Assessments of the Miriam

- Justice — Make the fairness logic explicit at the human level (no “because sovereignty”).

- Bridge — Show the path from symmetric evidence to a justified commitment (or explain why salvation doesn’t depend on pre-mortem disambiguation in honest-seeker cases). Assessments of the Miriam
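For instructors who track drills in a spreadsheet or script, the five levers can be sketched as a simple pass/fail checklist. This is only an illustrative sketch: the `LeverCheck` class, the `missing_levers` helper, and the pass/fail marking are assumptions made for the example, since the graders themselves used letter grades across ten dimensions.

```python
from dataclasses import dataclass, fields


@dataclass
class LeverCheck:
    """One response marked against the five coaching levers (True = satisfied).

    Hypothetical structure for illustration; not the graders' own rubric format.
    """
    answer: bool     # verdict on Miriam stated in one sentence, no hedging
    orthodoxy: bool  # Christ's exclusivity is retained
    mechanism: bool  # how God saves the honest seeker is explained
    justice: bool    # fairness logic is explicit at the human level
    bridge: bool     # path from symmetric evidence is addressed


def missing_levers(check: LeverCheck) -> list[str]:
    """Return the names of the levers the response failed, in rubric order."""
    return [f.name for f in fields(check) if not getattr(check, f.name)]


# Hypothetical example: a reply that preserves orthodoxy but never states a verdict
example = LeverCheck(answer=False, orthodoxy=True, mechanism=False,
                     justice=False, bridge=False)
print(missing_levers(example))
```

A student's goal in each drill would be an empty list; anything returned names the lever to rework first.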

10) Concrete classroom drills (use these weekly)

Drill 1 — 30-word verdicts:

Give students 60 seconds to write a 30-word verdict to Miriam with one clause on mechanism. Grade only (1) directness and (2) mechanism clarity.

Drill 2 — Bridge repair:

Hand students a typical ontological answer (nature-change, election, etc.). Their task: add one paragraph that bridges to the symmetric-evidence scenario.

Drill 3 — Mechanism swap:

Have half the class defend Inclusivism/OT-saints/name semantics; the other half defend post-mortem opportunity. Each must maintain exclusivity and justice. Cross-examine.

Drill 4 — Circularity hunt:

Give a “sheep/elect” style reply. Students must (a) state the circularity and (b) propose prospective criteria or an alternative mechanism that avoids it.

Drill 5 — Evidence with fairness priming:

Let students write their best “Christianity over Islam” paragraph—but only after a 2-sentence fairness resolution up front. If they can’t resolve fairness first, they fail the drill. Assessments of the Miriam

11) If you teach a Reformed cohort: how not to self-sabotage

You can affirm monergism without earning an F on fairness:

- Replace “God can do what He wants” with: “God’s justice is recognizably good because His judgment tracks what a person did with the light they actually had; election governs final outcomes, but culpability still maps to light-response.” (That’s exactly what the top answer operationalizes.) Assessments of the Miriam

- If you reject inclusivism, consider an explicit post-mortem clarification path for the honest seeker (which the rubric recognized as a serious, fairness-preserving move).

12) What to put on your students’ desks as a one-page “Miriam Card”

Front (prompts):

✓ State the verdict in one sentence.

✓ Preserve Christ’s exclusivity in one sentence.

✓ Give the mechanism in two sentences.

✓ Show why that mechanism satisfies justice and doesn’t reward evasion.

✓ If evidence is symmetric, explain how fairness is preserved (proportional light or post-mortem clarification).

Back (don’ts):

✗ Don’t answer an ontological question you weren’t asked. Assessments of the Miriam

✗ Don’t use circular identity labels (“sheep”) as explanations.

✗ Don’t invoke sovereignty as a substitute for fairness reasoning.

✗ Don’t swap to “Christianity has better evidence” before resolving fairness. Assessments of the Miriam

Bottom line

When apologists answer plainly, retain orthodoxy, spell out a mechanism, and make fairness intelligible, they score well and actually serve the seeker. The thread’s highest scorer proved that these are compatible moves, not trade-offs. Train to that pattern, and you’ll stop bleeding credibility on the very problem most seekers intuitively feel.

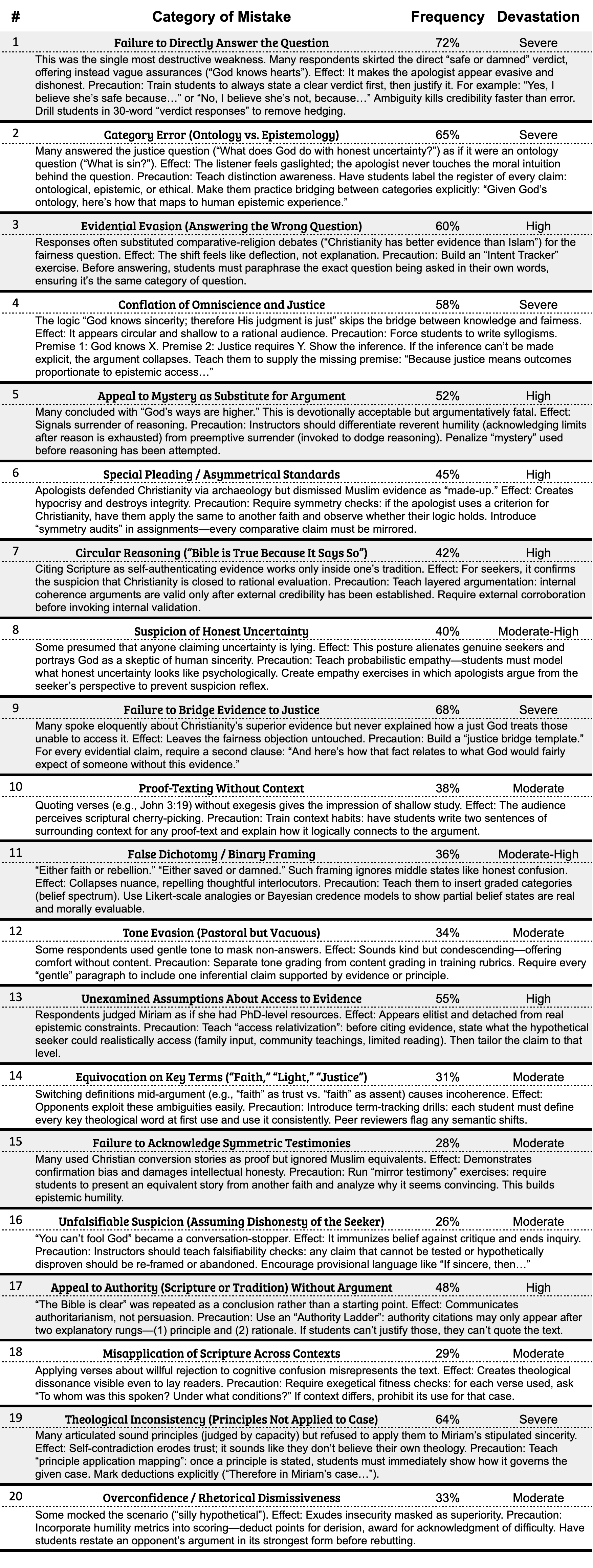

◉ Common Mistakes
